Key Points

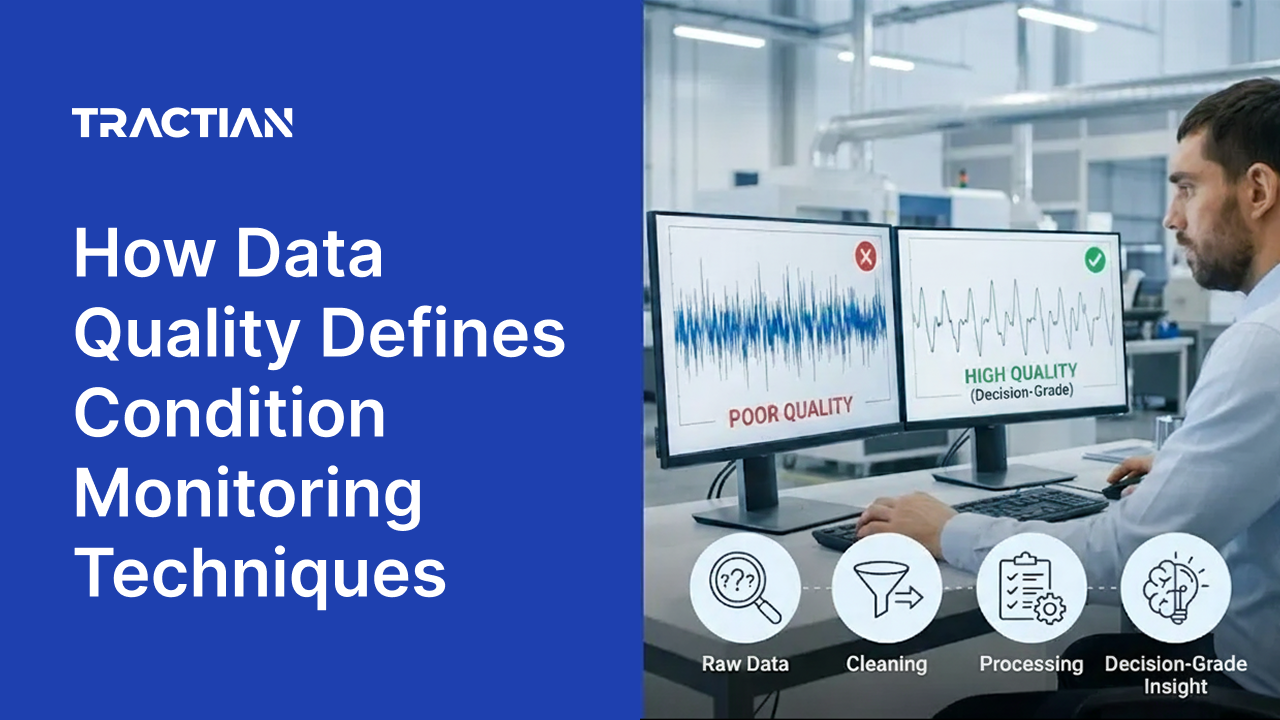

- Condition monitoring techniques don't fail because the method is wrong. They fail because the data feeding them lacks the resolution, frequency, or context to support a confident diagnosis.

- Data quality in condition monitoring has specific, measurable dimensions. They are sampling frequency, sensor resolution, contextual metadata, and capture consistency.

- When data quality is insufficient, teams compensate by using manual verification routes, handheld checks, and senior analyst interpretation, creating hidden costs that undermine the program's value.

- Decision-grade data quality is the threshold where technique outputs can be acted on without secondary verification, and it should be the standard that teams evaluate against.

The Variable Upstream of Every Technique

The effectiveness of any condition monitoring technique is governed by the quality of data feeding it, not the sophistication of the method itself.

Condition monitoring techniques are well understood in principle: vibration analysis, oil analysis, thermography, and ultrasound. Most reliability teams can list them, define their applications, and explain when each one applies.

The conversation that rarely happens is what separates the same technique producing a confident diagnosis in one facility from producing an ambiguous alert in another. The technique doesn't change between those two scenarios. The data does.

This distinction matters more than most programs acknowledge. According to IoT Analytics, the accuracy of many predictive maintenance solutions remains below 50%, creating situations where teams respond to alerts only to find equipment operating normally. That isn't a failure of vibration analysis or thermography as methods. It's a failure of the data quality supporting them.

When the upstream inputs lack resolution, context, or consistency, even proven techniques produce outputs that teams can't trust and won't act on.

This article examines the variable that most condition-monitoring conversations overlook: the quality of the data itself. It covers what data quality means in concrete terms, how it shapes the practical reach of every technique, what it costs when it falls short, and what the standard should be.

What Data Quality Means in Condition Monitoring

In condition monitoring, data quality has specific, measurable dimensions that directly determine what a technique can and can't reveal.

The term "data quality" in this context doesn't refer to clean databases or properly formatted fields. It refers to the characteristics of the captured signal and the metadata surrounding it.

Four dimensions of data quality

Four dimensions define whether the data feeding a condition monitoring technique is sufficient for reliable diagnosis.

One: Sampling Frequency

Sampling frequency determines how often readings are captured. A vibration reading taken every 60 minutes and one taken every 5 minutes represent the same technique applied to the same asset, but the outcomes diverge sharply. The 5-minute interval captures transient events, intermittent faults, and early-stage progression patterns that the hourly reading misses entirely. Rapid-onset faults can develop and escalate between 60-minute samples without ever appearing in the data.
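The effect of the capture interval can be illustrated with a minimal sketch. It assumes readings are snapshots taken at fixed intervals starting at minute 0, and that a transient event is visible only if a snapshot lands inside it. The function name and the example event are hypothetical, not taken from any monitoring product.

```python
import math


def is_captured(event_start_min: float, event_duration_min: float, interval_min: float) -> bool:
    """Return True if at least one fixed-interval reading lands inside the event window.

    Assumes readings are taken at t = 0, interval, 2 * interval, ... (in minutes).
    """
    # First scheduled reading at or after the moment the event begins.
    first_reading = math.ceil(event_start_min / interval_min) * interval_min
    return first_reading <= event_start_min + event_duration_min


# Hypothetical transient: starts 62 minutes into the shift and lasts 12 minutes.
print(is_captured(62, 12, interval_min=5))   # True: the reading at minute 65 sees it
print(is_captured(62, 12, interval_min=60))  # False: readings at minutes 60 and 120 both miss it
```

Real faults are not this tidy, but the arithmetic shows why a short-lived event can leave no trace in hourly data.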

Two: Sensor Resolution

Sensor resolution refers to the frequency range and sensitivity of the hardware. A sensor capped at 1 kHz can detect imbalance and late-stage bearing degradation, but it will miss the high-frequency signatures in the 10-32 kHz range associated with early bearing wear and gear mesh anomalies. The technique is the same. The hardware's ability to capture the relevant signal is not.
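As a rough illustration, the check below asks which fault signatures begin within a sensor's usable frequency range. The 10-32 kHz band comes from the paragraph above; the imbalance entry assumes a machine running at 1,800 RPM, where the 1x running-speed component sits at 30 Hz. The names and the simple "band starts below the limit" rule are assumptions made for this sketch.

```python
# Illustrative bands only. The early-bearing-wear band is the 10-32 kHz range
# cited in the text; the imbalance band assumes 1x running speed at 1,800 RPM.
FAULT_BANDS_HZ = {
    "imbalance (1x at 1,800 RPM)": (30.0, 30.0),
    "early bearing wear": (10_000.0, 32_000.0),
}


def visible_faults(sensor_fmax_hz: float) -> list[str]:
    """List the fault signatures whose band starts at or below the sensor's upper limit."""
    return [
        name
        for name, (low_hz, _high_hz) in FAULT_BANDS_HZ.items()
        if low_hz <= sensor_fmax_hz
    ]


print(visible_faults(1_000))   # ['imbalance (1x at 1,800 RPM)']
print(visible_faults(32_000))  # ['imbalance (1x at 1,800 RPM)', 'early bearing wear']
```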

Three: Contextual Metadata

Contextual metadata provides the operating conditions surrounding each reading. Without real-time data on rotation speed, load, and ambient temperature, a vibration spike might indicate a bearing defect or simply reflect a change in operating speed. Context eliminates that ambiguity. Especially on variable-speed equipment, the absence of RPM data makes frequency-based fault identification unreliable because the fault frequencies shift with speed.
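To see why fault frequencies move with speed, consider the standard kinematic formula for the ball pass frequency of a bearing's outer race (BPFO), which scales directly with shaft speed. The sketch below assumes no slip and uses a hypothetical bearing geometry (9 rolling elements, 8 mm ball diameter, 40 mm pitch diameter); it is an illustration, not a description of any vendor's diagnostics.

```python
import math


def bpfo_hz(rpm: float, n_balls: int, ball_d_mm: float, pitch_d_mm: float,
            contact_angle_deg: float = 0.0) -> float:
    """Ball pass frequency, outer race: (n / 2) * f_shaft * (1 - (d / D) * cos(angle))."""
    shaft_hz = rpm / 60.0
    ratio = (ball_d_mm / pitch_d_mm) * math.cos(math.radians(contact_angle_deg))
    return (n_balls / 2.0) * shaft_hz * (1.0 - ratio)


# Hypothetical bearing geometry, evaluated at two operating speeds.
for rpm in (1800, 1200):
    print(f"{rpm} RPM -> BPFO of about {bpfo_hz(rpm, 9, 8.0, 40.0):.0f} Hz")
# 1800 RPM -> BPFO of about 108 Hz
# 1200 RPM -> BPFO of about 72 Hz
```

The same defect appears at about 108 Hz at one speed and 72 Hz at another, so an alarm band fixed around either value would misread the other.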

Four: Capture Consistency

Capture consistency determines whether data is collected reliably across operating states. Intermittent machines that cycle on and off throughout the day require intelligent capture that activates during operation, not fixed-interval sampling that may record idle equipment and produce baselines that don't reflect actual running conditions.
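A small sketch shows how idle readings distort a baseline. It assumes each reading is paired with the RPM measured at capture time, and it uses an arbitrary 100 RPM cutoff to decide whether the machine was running. The threshold, function name, and sample values are all hypothetical.

```python
from statistics import mean


def running_baseline(readings, min_running_rpm=100.0):
    """Average vibration using only readings taken while the machine was running.

    readings: iterable of (rpm, velocity_mm_s) pairs.
    Returns None if no reading was taken in a running state.
    """
    running = [velocity for rpm, velocity in readings if rpm >= min_running_rpm]
    return mean(running) if running else None


# Hypothetical fixed-interval samples from a machine that cycles on and off.
samples = [(0, 0.1), (1780, 2.4), (0, 0.1), (1775, 2.6)]

naive = mean(velocity for _rpm, velocity in samples)
print(f"all samples:  {naive:.2f} mm/s")                      # 1.30 mm/s
print(f"running only: {running_baseline(samples):.2f} mm/s")  # 2.50 mm/s
```

In this example the baseline built from all samples is roughly half the true running level, so a healthy machine at normal load would already look like a deviation.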

These four dimensions aren’t products of the technique. They're features of the data infrastructure supporting the technique. Two facilities running "vibration analysis" can have fundamentally different diagnostic capabilities based solely on how these upstream variables are configured.

How Data Quality Shapes the Capability Ceiling

Every condition monitoring technique has a theoretical capability range, but data quality determines how much of that range the implementation actually reaches.

As a method, vibration analysis can detect bearing wear, misalignment, imbalance, looseness, cavitation, gear defects, and dozens of other failure modes. That's the theoretical ceiling. Whether a given implementation reaches it depends entirely on the data layer beneath it.

Consider vibration analysis applied to a variable-speed pump. Without real-time RPM tracking, the system can't correlate vibration data with rotational speed. Fault frequencies shift with speed, and without that reference, the system can't distinguish normal speed-dependent variation from an emerging defect.

The result is either a generic alert that requires manual interpretation or, worse, a missed fault that didn't trigger any threshold because the frequency shifted outside the expected band. Add contextual RPM data, and the same vibration signal becomes interpretable. The system can identify that an increase in amplitude at a specific frequency correlates with a bearing defect at the current operating speed, providing a specific diagnosis rather than a vague notification.
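One common way to use RPM context is to express spectral peaks in orders, meaning multiples of shaft speed, instead of raw hertz. The sketch below assumes the RPM at capture time is known; the function name and peak values are illustrative only.

```python
def to_orders(peak_hz: float, rpm: float) -> float:
    """Express a spectral peak as a multiple of shaft rotational frequency (orders)."""
    shaft_hz = rpm / 60.0
    return peak_hz / shaft_hz


# Hypothetical peaks observed on the same pump at two operating speeds.
print(f"{to_orders(108.0, 1800):.1f} orders")  # 3.6 orders
print(f"{to_orders(72.0, 1200):.1f} orders")   # 3.6 orders
```

Two peaks that look unrelated in hertz resolve to the same order once speed is known, which is what allows them to be matched to a single fault signature.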

The same dynamic applies to sensor resolution. Without high-frequency capture, the system detects faults only after they've progressed far enough to alter lower-frequency vibration patterns. By that point, the intervention window has narrowed considerably, and the repair scope has often expanded.

With broad-spectrum capture reaching into the 10-32 kHz range, the same technique detects those faults weeks or months earlier, when the fix is simpler and scheduling flexibility is greater.

The technique doesn't change in any of these scenarios. The data quality changes, and with it, the technique's practical reach. Facilities that invest in condition-monitoring techniques without examining the data quality behind them may be operating well below the capability ceiling they assumed they had purchased.

The Hidden Cost of Insufficient Data Quality

When data can't be trusted, teams don't stop monitoring. They compensate with manual verification, creating invisible costs that undermine the program's ROI.

Poor data quality doesn't cause maintenance teams to abandon their condition monitoring programs. It causes them to build workarounds. These workarounds quickly become normalized patterns, and their costs become invisible because no one tracks them as a separate budget line.

Handheld verification routes

The most common compensating behavior is handheld verification routes. Technicians carry portable vibration collectors to "confirm" what the continuous monitoring system flagged. The continuous system was implemented specifically to replace those routes. When both run in parallel, the facility is paying for the monitoring program and the manual labor it was supposed to eliminate.

Senior analyst gatekeeping

A second pattern is senior analyst gatekeeping. Alerts flow through one or two experienced analysts who decide which are real and which are noise. This delays response times and creates a single point of failure when those analysts are unavailable. It also means the monitoring system's value is capped by the bandwidth of a few individuals rather than scaling with the number of assets being monitored.

Conservative inaction

The third pattern is conservative inaction. When teams don't trust alerts, they default to waiting until a problem is visible, audible, or confirmed by other means. The monitoring system becomes a record-keeping tool rather than a decision tool. Failures that could have been caught early are allowed to progress because the data didn't carry enough confidence to justify action.

Accumulation of invisible costs

These costs accumulate across the asset portfolio without appearing in any program assessment. According to Deloitte, poor maintenance strategies can reduce a plant's overall productive capacity by 5 to 20 percent. When monitoring data can't be trusted, the facility bears the cost of the program and the cost of the failures the program should have prevented.

This brings us back to the original premise. Asking whether your facility should use condition-monitoring techniques is the easy question, and a “yes” signals competitive intent. The more valuable question, the one that determines whether your efforts deliver the advantage you seek, is this: “Given the quality of the data it produces and uses, is your condition monitoring saving labor or creating it?”

What Decision-Grade Data Quality Requires

Decision-grade data quality is the threshold at which condition-monitoring outputs can be acted on without secondary verification.

Practically speaking, “decision-grade” doesn't mean perfect data or zero false positives. It means that the gap between data and action is closed. The data is good enough that a maintenance team can receive an alert, understand the diagnosis, and initiate action without pulling out a handheld analyzer or waiting for a specialist to review the finding.

Three requirements define this threshold.

- Sufficient resolution to distinguish failure modes. The data must enable the system to differentiate between conditions that produce similar symptoms but require different responses. Lubrication degradation and early bearing spalling both alter vibration patterns, but one requires regreasing, and the other requires bearing replacement. A system that can't distinguish between them produces alerts that require human interpretation to be actionable.

- Contextual intelligence that prevents false positives. Operating speed, load, ambient temperature, and machine state must be considered in the analysis. Without this context, normal operational variation triggers false alarms. False alarms don't just waste time on individual investigations. They train teams to ignore the system, which is the most expensive outcome because it also means real faults go ignored.

- Consistent capture that supports trend analysis. Reliable data over time enables the system to track degradation curves and estimate remaining useful life. Inconsistent or gap-filled data undermines trending and forces teams back into point-in-time assessments, which can't distinguish between a stable condition and an accelerating one.
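The trending requirement can be sketched with an ordinary least-squares slope. Two hypothetical assets both read 2.0 mm/s today, so a point-in-time check cannot tell them apart, but their histories differ. The data and function name are invented for illustration, and a straight-line fit is only the simplest possible stand-in for a degradation curve.

```python
def trend_slope(days, values):
    """Least-squares slope of values against days (units of value per day)."""
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    return sxy / sxx


days = [0, 1, 2, 3, 4]
stable = [2.0, 2.1, 1.9, 2.0, 2.0]  # hypothetical asset hovering around 2.0 mm/s
rising = [1.2, 1.4, 1.6, 1.8, 2.0]  # hypothetical asset climbing toward 2.0 mm/s

print(f"stable: {trend_slope(days, stable):+.2f} mm/s per day")  # -0.01
print(f"rising: {trend_slope(days, rising):+.2f} mm/s per day")  # +0.20
```

If readings are missing, the fit rests on fewer points and the slope becomes less certain, which is how capture gaps push teams back toward single-reading judgments.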

When data meets this standard, the condition monitoring program works as intended. Techniques deliver their full capability range. Teams respond to alerts with confidence rather than skepticism. Expert bandwidth is preserved for genuinely complex cases rather than consumed by routine verification of findings that the system should have resolved on its own.

How Tractian Builds Data Quality Into Condition Monitoring

Tractian's condition monitoring platform delivers the resolution, context, and consistency required for decision-grade outputs.

The data quality standards described in this article are built into the Tractian hardware and software architecture. Smart Trac Ultra wireless vibration sensors capture triaxial vibration data up to 32 kHz every 5 minutes, providing the frequency range and sampling rate needed to detect early-stage faults that lower-resolution systems miss.

Tractian’s industrial-grade sensors cover the full diagnostic spectrum discussed in the capability ceiling section, ensuring that vibration analysis operates at its maximum potential rather than at a fraction of it.

Contextual intelligence is embedded at the point of capture. It’s the mechanism by which Tractian prevents the false positives that erode trust in condition monitoring programs.

Capture consistency is addressed through Always Listening, which activates data collection when intermittent machines are running and avoids the false baselines that fixed-interval sampling produces on cyclic equipment. Sub-GHz wireless connectivity with 4G/LTE cellular backhaul ensures data reaches the platform without relying on plant Wi-Fi, reducing a common point of failure.

Tractian’s automated failure mode diagnostics apply patented neural networks to all major failure modes, drawing on a bearing library of 70,000+ models to ensure fault-frequency calculations are accurate for the specific hardware installed. Three-tier benchmarking, comparing each asset against its own history, against facility peers, and against an anonymized global dataset, validates findings before they surface as alerts. The result is diagnostic output that teams can trust without secondary verification.

Because Tractian's condition monitoring operates within a unified platform that includes a native, AI-powered CMMS, trust converts directly into action. Diagnostic insights flow into work order management with AI-generated SOPs attached, closing the loop from detection to scheduled repair without manual handoff.

Explore Tractian condition monitoring solutions to see how decision-grade data quality enhances the performance of your condition monitoring techniques and transforms your maintenance team’s workflow.

Which Industries, Regardless of Condition Monitoring Techniques, Benefit from Higher-Quality Data Inputs?

Data quality determines the effectiveness of condition monitoring techniques wherever critical equipment runs, but certain operating contexts amplify the consequences when data falls short.

Lean maintenance teams, harsh environments, variable operating conditions, and high asset counts all reduce the margin for ambiguous alerts and manual verification. When the data feeding condition-monitoring techniques lacks resolution, context, or consistency, these environments absorb the hidden costs most acutely.

For these industries, the shift from basic signal capture to decision-grade data quality lets maintenance teams act on trusted diagnoses rather than compensate for data gaps with handheld routes and specialist interpretation.

- Automotive & Parts: High-speed production lines demand condition monitoring techniques that deliver clear diagnoses without interpretation delays, and data quality determines whether alerts support confident action or require manual verification that disrupts tight schedules.

- Fleet: Shop equipment supports vehicle turnaround, and condition-monitoring techniques only reduce downtime when the underlying data is accurate enough for technicians to trust alerts without requiring secondary confirmation across service bays.

- Manufacturing: Motors, pumps, and conveyors generate high data volumes, and without sufficient resolution and context, teams spend more time filtering noise than acting on the insights their condition monitoring techniques should deliver.

- Oil & Gas: Remote assets and hazardous environments make handheld verification impractical, requiring data quality high enough for condition-monitoring techniques to produce trusted diagnoses without on-site confirmation.

- Chemicals: Process stability depends on early intervention, and condition-monitoring techniques only prevent disruptions when the underlying data has enough context to distinguish real faults from normal operational variation.

- Food & Beverage: Tight schedules and sanitation requirements compress maintenance windows, making it essential that condition monitoring techniques operate on data precise enough to support immediate action without workflow delays.

- Mills & Agriculture: Seasonal processing creates high-stakes periods when condition-monitoring techniques must deliver prioritized, trustworthy outputs so that limited maintenance resources can focus on harvest-critical equipment first.

- Mining & Metals: Harsh conditions and heavy equipment generate complex vibration signatures, requiring data of sufficient quality for AI-driven diagnostics to distinguish genuine faults from environmental noise without specialist review.

- Heavy Equipment: Variable loads produce inconsistent baselines, making high-resolution contextual data essential for condition monitoring techniques to identify true anomalies and build the trust teams need to act decisively.

- Facilities: Distributed assets across multiple sites require centralized visibility with local relevance, and data quality determines whether condition monitoring techniques deliver prioritized guidance or raw signals that require manual translation.

Frequently Asked Questions About Data Quality and Condition Monitoring Techniques

What are the most common condition monitoring techniques?

The most widely used techniques include vibration analysis, oil analysis, thermography, and ultrasound. Each monitors different indicators of equipment health. The effectiveness of any technique depends on the quality and context of the data captured, not just the method selected.

How does data quality affect condition monitoring accuracy?

Data quality directly determines whether a condition monitoring system can distinguish between failure modes, detect early-stage faults, and avoid false positives. Poor sampling frequency, limited sensor range, or missing operating context narrow a technique's practical capability, regardless of how advanced the method is.

What is decision-grade data quality?

Decision-grade data quality is the threshold at which condition-monitoring outputs can be acted on without requiring secondary manual verification. It requires sufficient resolution to differentiate failure modes, contextual metadata to prevent false positives, and consistent capture to support reliable trend analysis.

How does Tractian ensure high data quality for condition monitoring?

Tractian's Smart Trac Ultra sensor captures triaxial vibration data up to 32 kHz every 5 minutes, along with temperature, RPM, and runtime. Contextual intelligence features such as RPM Encoder™ and the Adaptable Temperature Algorithm provide the operating context needed to prevent misinterpretation and false alarms.

Can poor data quality increase maintenance costs?

Yes. When teams can't trust monitoring data, they compensate by using manual verification routes, handheld checks, and senior analyst reviews. These hidden costs accumulate across the asset portfolio and directly offset the savings the monitoring program was designed to deliver.

How does Tractian's condition monitoring connect to maintenance execution?

Tractian's condition monitoring platform integrates natively with its AI-powered CMMS. When an anomaly is detected and diagnosed, the insight flows directly into work order management with recommended procedures, eliminating the manual handoff between detection and action.